Database Migrations

Django migrations are how we handle changes to the database in Sentry.

Django migration official docs: https://docs.djangoproject.com/en/2.2/topics/migrations/ . These will cover most things you need to understand what a migration is doing.

Commands

Note that for all of these commands you can substitute getsentry for sentry if in the getsentry repo.

Upgrade your database to latest

sentry upgrade will automatically bring your migrations up to date. You can also run sentry django migrate to access the migration command directly.

Move your database to a specific migration

This can be helpful for when you want to test a migration.

sentry django migrate <app_name> <migration_name> - Note that migration_name can be a partial match, often the number is all you need.

eg: sentry django migrate sentry 0005

This can be used to roll a migration back as well. Useful in dev if you make a mistake.

Produce SQL for a migration

A GitHub action will automatically comment on your PR with the SQL for your migration, and the comment will stay updated with any future changes. You can also manually generate SQL with this command.

sentry django sqlmigrate <app_name> <migration_name>

eg sentry django sqlmigrate sentry 0003

Generate Migrations

This generates migrations for you automatically based on changes you've made to models.

sentry django makemigrations

or

sentry django makemigrations <app_name> for a specific app.

eg sentry django makemigrations sentry

You can also generate an empty migration with sentry django makemigrations <app_name> --empty. This is useful for data migrations and other custom work.

Note that if you have added a new model, you also need to import the model in __init__.py, or the model will not be recognized in testing.

Merging migrations to master

When merging to master you might notice a conflict with migrations_lockfile.txt. This file is in place to help us avoid merging two migrations with the same migration number to master, and if you're conflicting with it then it's likely someone has committed a migration ahead of you.

To resolve this, rebase against latest master, delete your current migration and then regenerate it. If your migration was custom, just save the operations in a text file somewhere so that you can reapply them on the regenerated migration.

Always commit the changes to migrations_lockfile.txt with your migration.

Guidelines

There are some things we need to be careful about when running migrations.

Testing

Database migrations are risky operations that can lead to irreversible data loss or corruption. This is especially true for data migrations. For this reason, every migration should have a corresponding integration test.

To test your migration, derive a test case from TestMigrations and add it to tests/sentry/migrations.

For example:

class MyMigrationTest(TestMigrations):

migrate_from = "0123_previous_migration"

migrate_to = "0124_my_new_migration"

def setup_before_migration(self, apps):

# Create your db state here

Project = apps.get_model("sentry", "Project")

self.project = Project.objects.create(organization_id=self.organization.id, name="my_project")

def test(self):

# Test state after migration

self.project.refresh_from_db()

assert self.project.name == "MyProject"

To run the test locally, run pytest with --migrations flag. For example, pytest -v --migrations tests/getsentry/migrations/test_0XXX_migration_name.py.

Notes

- There is a known issue with the

django-pg-zero-downtime-migrationspackage which causes the roll back of aNOT NULLconstraint to fail. If this happens with an old migration test, it's ok to delete the test rather than trying to fix the issue. - If you want to use existing

create_*helper functions to create model instances, overridesetup_initial_staterather thansetup_before_migration. This function will run before the database is rolled back tomigration_from.

Filters

If a (data) migration involves large tables, or columns that aren't indexed it is better to iterate over the entire table instead of using a filter. For example:

EnvironmentProject.objects.filter(environment__name="none")Because there are too many EnvironmentProject rows, this will bring too many rows into memory at once.

Instead we should iterate over all the EnvironmentProject rows using RangeQuerySetWrapperWithProgressBar since it will do it in chunks.

For example:

for env in RangeQuerySetWrapperWithProgressBar(EnvironmentProject.objects.all()):

if env.name == 'none':

# Do what you needWe generally prefer to avoid using .filter with RangeQuerySetWrapperWithProgressBar. Since it already orders by the id to iterate through the table,

we can't take advantage of any indexes on the fields, and could potentially scan a large number of rows for each chunk. This will run slower, but we

generally prefer that, since it averages the load out over a longer period of time, and makes each query to fetch each chunk fairly cheap.

Indexes

We prefer to create indexes on large existing tables with CREATE INDEX CONCURRENTLY. Our migration framework will do this automatically when creating

a new index. Note that when CONCURRENTLY is used we can't run the migration in a transaction, so it's important to use atomic = False to run these.

When adding indexes to large tables you should use a is_post_deployment migration as creating the index could take longer than the migration statement timeout of 5s.

Deleting columns

This is complicated due to our deploy process. When we deploy, we run migrations, and then push out the application code, which takes a while. This means that if we just delete a column or model, then code in sentry will be looking for those columns/tables and erroring until the deploy completes. In some cases, this can mean Sentry is hard down until the deploy is finished.

To avoid this, follow these steps:

- Mark the column as nullable if it isn't, and create a migration. (ex.

BoundedIntegerField(null=True)) - Deploy.

- Remove the column from the model, but in the migration make sure we only mark the state as removed.

- Deploy.

- Finally, create a migration that deletes the column.

Here's an example of removing columns that were already nullable. First we remove the columns from the model, and then modify the migration to only update the state and make no database operations.

operations = [

migrations.SeparateDatabaseAndState(

database_operations=[],

state_operations=[

migrations.RemoveField(model_name="alertrule", name="alert_threshold"),

migrations.RemoveField(model_name="alertrule", name="resolve_threshold"),

migrations.RemoveField(model_name="alertrule", name="threshold_type"),

],

)

]Once this is deployed, we can then deploy the actual column deletion. This pr will have only a migration, since Django no longer knows about these fields. Note that the reverse SQL is only for dev, so it's fine to not assign a default or do any sort of backfill:

operations = [

migrations.SeparateDatabaseAndState(

database_operations=[

migrations.RunSQL(

"""

ALTER TABLE "sentry_alertrule" DROP COLUMN "alert_threshold";

ALTER TABLE "sentry_alertrule" DROP COLUMN "resolve_threshold";

ALTER TABLE "sentry_alertrule" DROP COLUMN "threshold_type";

""",

reverse_sql="""

ALTER TABLE "sentry_alertrule" ADD COLUMN "alert_threshold" smallint NULL;

ALTER TABLE "sentry_alertrule" ADD COLUMN "resolve_threshold" int NULL;

ALTER TABLE "sentry_alertrule" ADD COLUMN "threshold_type" int NULL;

""",

hints={"tables": ["sentry_alertrule"]},

)

],

state_operations=[],

)

]Deleting Tables

Extra care is needed here if the table is referenced as a foreign key in other tables. In that case, first remove the foreign key columns in the other tables, then come back to this step.

- Remove any database level foreign key constraints from this table to other tables by setting

db_constraint=Falseon the columns. - Deploy

- Remove the model and all references from the sentry codebase. Make sure that the migration only marks the state as removed.

- Deploy.

- Create a migrations that deletes the table.

- Deploy

Here's an example of removing this model:

class AlertRuleTriggerAction(Model):

alert_rule_trigger = FlexibleForeignKey("sentry.AlertRuleTrigger")

integration = FlexibleForeignKey("sentry.Integration", null=True)

type = models.SmallIntegerField()

target_type = models.SmallIntegerField()

# Identifier used to perform the action on a given target

target_identifier = models.TextField(null=True)

# Human readable name to display in the UI

target_display = models.TextField(null=True)

date_added = models.DateTimeField(default=timezone.now)

class Meta:

app_label = "sentry"

db_table = "sentry_alertruletriggeraction"First we checked that it's not referenced by any other models, and it's not. Next we need to remove and db level foreign key constraints. To do this, we change these two columns and generate a migration:

alert_rule_trigger = FlexibleForeignKey("sentry.AlertRuleTrigger", db_constraint=False)

integration = FlexibleForeignKey("sentry.Integration", null=True, db_constraint=False)The operations in the migration look like

operations = [

migrations.AlterField(

model_name='alertruletriggeraction',

name='alert_rule_trigger',

field=sentry.db.models.fields.foreignkey.FlexibleForeignKey(db_constraint=False, on_delete=django.db.models.deletion.CASCADE, to='sentry.AlertRuleTrigger'),

),

migrations.AlterField(

model_name='alertruletriggeraction',

name='integration',

field=sentry.db.models.fields.foreignkey.FlexibleForeignKey(db_constraint=False, null=True, on_delete=django.db.models.deletion.CASCADE, to='sentry.Integration'),

),

]And we can see the sql it generates just drops the FK constaints

BEGIN;

SET CONSTRAINTS "a875987ae7debe6be88869cb2eebcdc5" IMMEDIATE; ALTER TABLE "sentry_alertruletriggeraction" DROP CONSTRAINT "a875987ae7debe6be88869cb2eebcdc5";

SET CONSTRAINTS "sentry_integration_id_14286d876e86361c_fk_sentry_integration_id" IMMEDIATE; ALTER TABLE "sentry_alertruletriggeraction" DROP CONSTRAINT "sentry_integration_id_14286d876e86361c_fk_sentry_integration_id";

COMMIT;So now we deploy this and move onto the next stage.

The next stage involves removing all references to the model from the codebase. So we do that, and then we generate a migration that removes the model from the migration state, but not the database. The operations in this migration look like

operations = [

migrations.SeparateDatabaseAndState(

state_operations=[migrations.DeleteModel(name="AlertRuleTriggerAction")],

database_operations=[],

)

]and the generated SQL shows no database changes occurring. So now we deploy this and move into the final step.

In this last step, we just want to manually write DDL to remove the table. So we use sentry django makemigrations --empty to produce an empty migration, and then modify the operations to be like:

operations = [

migrations.RunSQL(

"""

DROP TABLE "sentry_alertruletriggeraction";

""",

reverse_sql="CREATE TABLE sentry_alertruletriggeraction (fake_col int)", # We just create a fake table here so that the DROP will work if we roll back the migration.

)

]Then we deploy this and we're done.

Foreign Keys

Creating foreign keys is mostly fine, but for some large/busy tables like Project, Group it can cause problems due to difficulties in acquiring a lock. You can still create a Django level foreign key though, without creating a database constraint. To do so, set db_constraint=False when defining the key.

Renaming Tables

Renaming tables is dangerous and will result in downtime. The reason this occurs is that during the deploy a mix of old/new code will be running. So once we rename the table in Postgres, the old code will immediately start erroring if it attempts to access it. There are two ways to handle renaming a table:

- Don't rename the table in Postgres. Instead, just rename the model in Django, and make sure

Meta.db_tableis set to the current tablename so that nothing breaks. This is the preferred method. - If you absolutely want to rename the table, then the steps would be:

- Create a table with the new name

- Start dual-writing to both the old and new table, ideally in a transaction.

- Backfill the old rows into the new table.

- Change the model to start reading from the new table.

- Stop writing to the old table and remove references from the code.

- Drop the old table.

- Generally, this is not worth doing and a lot of risk/effort compared to the reward.

Adding Columns

With postgres 14, columns can be added to tables of all sizes as deploy time migrations if you follow the guidelines on default values & allowing nulls. When creating new columns they should either be:

- Not null with a default. https://develop.sentry.dev/database-migrations/#adding-not-null-to-columns

- Created as nullable. If no default value can be set on the column, then it's best just to make it nullable.

Adding Columns With a Default

We run Postgres 14 in production. This means that we can now safely add columns with a default without worrying about rewriting the table. We still need to be careful though.

Django's default behaviour for creating a new not null column with a default is dangerous. When adding a default, in the migrations Django will add the default to backfill all fields, then immediately remove it so that it can handle them in the app layer. This means that during a deploy, the column is sitting in production without a default until all code rolls out. This means that inserts will fail for this table until the deploy completes.

To work around this, you can tell your migration to leave the default in place in Postgres.

operations = [

migrations.SeparateDatabaseAndState(

database_operations=[

migrations.RunSQL(

"""

ALTER TABLE "sentry_groupedmessage" ADD COLUMN "type" integer NOT NULL DEFAULT 1;

""",

reverse_sql="""

ALTER TABLE "sentry_groupedmessage" DROP COLUMN "type";

""",

hints={"tables": ["sentry_groupedmessage"]},

),

],

state_operations=[

migrations.AddField(

model_name="group",

name="type",

field=sentry.db.models.fields.bounded.BoundedPositiveIntegerField(default=1),

),

],

)

]Adding Not Null To Columns

It can be dangerous to add not null to columns, even if there is data in every row of the table for that column. This is because Postgres still needs to perform a not null check on all rows before it can add the constraint. On small tables this can be fine since the check will be quick, but on larger tables this can cause downtime. There are a few options here to make this safe:

ALTER TABLE tbl ADD CONSTRAINT cnstr CHECK (col IS NOT NULL) NOT VALID;

ALTER TABLE tbl VALIDATE CONSTRAINT cnstr;One approach is to create the constraint as not valid. Then we validate it afterwards. We still need to scan the whole table to validate, but we only need to hold a SHARE UPDATE EXCLUSIVE lock, which only blocks other ALTER TABLE commands, but will allow reads/writes to continue. This works well, but has a slight performance penalty of 0.5-1%. After Postgres 12 we can extend this method to add a real NOT NULL constraint.

Alternatively, if the table is small enough and has low enough volume it should be safe to just create a normal NOT NULL constraint. Small being a few million rows or less.

Altering Column Types

Altering the type of a column is usually dangerous, since it will require a whole table rewrite. There are some exceptions:

- Altering a

varchar(<size>)to avarcharwith a larger size. - Altering any

varchartotext - Altering a

numericto anumericwhere theprecisionis higher but thescaleis the same.

For any other types, the best path forward is usually:

- Create a column with the new type

- Start dual-writing to both the old and new column.

- Backfill and convert the old column values into the new column.

- Change the code to use the new field.

- Stop writing to the old column and remove references from the code.

- Drop the old column from the database.

Generally this can be worth a discussion in #discuss-backend.

Renaming Columns

Renaming columns is dangerous and will result in downtime. The reason this occurs is that during the deploy a mix of old/new code will be running. So once we rename the column in Postgres, the old code will immediately start erroring if it attempts to access it. There are two ways to handle renaming a column:

- Don't rename the column in Postgres. Instead, just rename the field in Django, and use

db_columnin the definition to set it to the existing column name so that nothing breaks. This is the preferred method. - If you absolutely want to rename the column, then the steps would be:

- Create a column with the new name

- Start dual-writing to both the old and new column.

- Backfill the old column values into the new column.

- Change the field to start reading from the new column.

- Stop writing to the old column and remove references from the code.

- Drop the old column from the database.

- Generally, this is not worth doing and a lot of risk/effort compared to the reward.

Siloed Database Management

The local database for siloed servers is separate from the database used for monolith operations. The siloed databases are named region and control matching the silo modes. Within django, the default connection is for the region database, and the control connection is for the control database. The same database names are used by both sentry and getsentry.

Cloning a monolith database

# Copy your existing application data into the split databases.

bin/split-silo-database --database sentry --reset

# When working with getsentry run the following from getsentry root directory

bin/split-silo-database --database getsentry --resetThere is a script in both sentry & getsentry that are functionally equivalent. If you are working on getsentry, you need to use the getsentry script to ensure that all of your tables end up in the correct siloed database.

The split-silo-database scripts use silo annotations on models to selectively dump your monolith database into the siloed databases.

Apply Migrations to siloed databases

You have two options for maintaining siloed databases:

- Run migrations on your monolith database, and use

split-silo-databaseto rebuild your siloed databases. - Run migrations for siloed databases.

To run migrations on the siloed databases, run migrations in region and control mode.

# Run migrations for region mode

SENTRY_SILO_DEVSERVER=1 SENTRY_SILO_MODE=REGION SENTRY_REGION=us getsentry upgrade

# Run migrations for control

SENTRY_SILO_DEVSERVER=1 SENTRY_SILO_MODE=CONTROL getsentry upgradeMigration Deployment

Employees Only

We support two kinds of migrations in our saas deployments:

- Deploy time migrations

- Post-deploy migrations

Deployment Migrations

Deployment migrations are run in each region and tenant before code is deployed. Deployment migrations are expected to finish quickly and all statements must complete within 5 seconds. If a migration could take longer because a large number of rows is being operated on, it should be deployed as a post-deploy migration instead. Deployment migrations are ideal for:

- Adding new tables and columns.

- Adding indexes to most tables.

- Removing columns and tables - as long as you follow the processes outlined above.

Post-deploy migrations (is_post_deployment)

Post-deploy migrations are run manually by engineers after a migration has been deployed to all regions. During deployment, post-deploy migrations are marked as complete (faked) with django's fake migration behavior. When a post-deploy migration is run, it is run against all regions and tenants. Post-deploy migrations are triggered manually by engineers.

Post-deploy migrations are ideal for:

- Adding indexes to large tables, where adding the index would take longer than 5 seconds in any given region.

- Doing data backfills or mutations on tables with more than 50,000 rows.

Post-deploy migrations should not be used for:

- Column additions, removals or renames.

- Table creation.

Using post-deploy migrations for these operations will cause an outage.

Running post-deploy migrations

Post-deploy migrations (both data and schema) are now run through a GoCD

pipeline named

post-deploy-migrations.

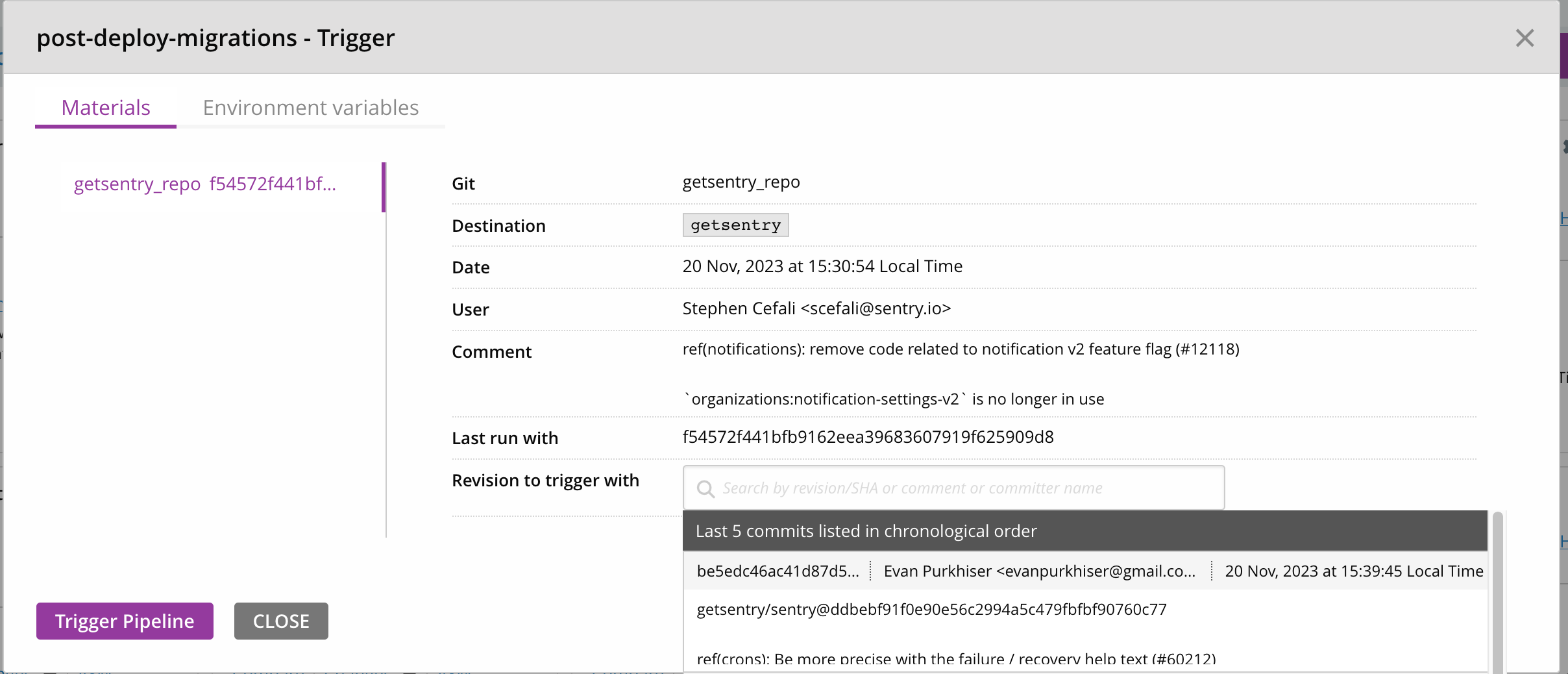

To run a post-deploy migration, first locate the post-deploy-migrations job. Click on the play button:

Under Materials, input the getsentry SHA you want to run migrations from. The sha you choose should be one that contains the migration, and has been deployed to all regions.

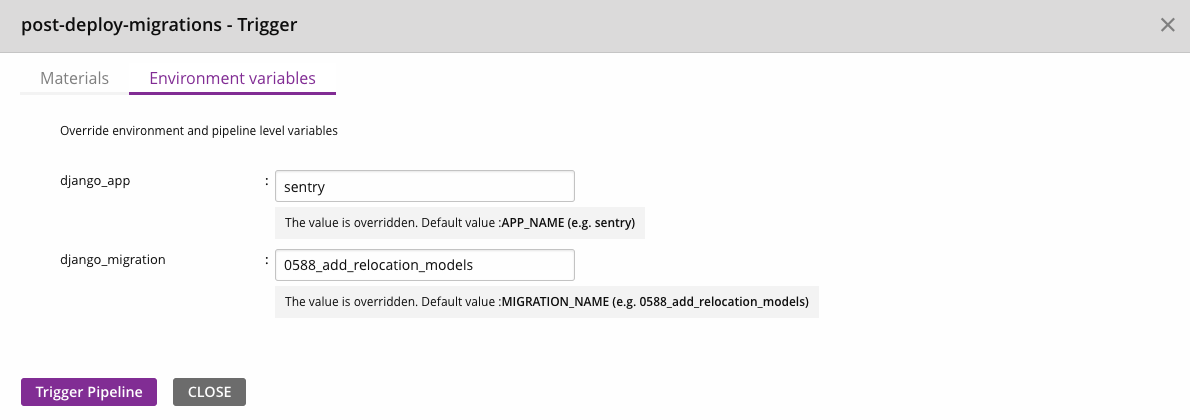

Then click Environment Variables and fill in a value for django_app and django_migration. The django_app should match the app name containing the migration. e.g. sentry, getsentry. Next, input the name of the migration to run, e.g. 0233_pickle_to_json_admin_auditlogentry.

Finally, click the Trigger Pipeline button.

Cancelling a post-deploy migration

Just cancel the GoCD stage.

We don't have tooling to reverse migrations, so generally we introduce another migration which reverse the migration we want to reverse and run that.

Cancelling a DDL/schema Migration

GoCD won’t always be able to kill a long-running query - so instead we'll need to find the query run in pg_stat_activity and kill it using pg_terminate_backend(pid). This will require assistance from SRE.